Introduction

The AI/LLM features in Trilium Notes let you interact with your Notes in a variety of ways, using as many of the major providers as we can support.

In addition to sending chats to LLM providers such as OpenAI, Anthropic, and Ollama, we also support agentic tool calling and embeddings.

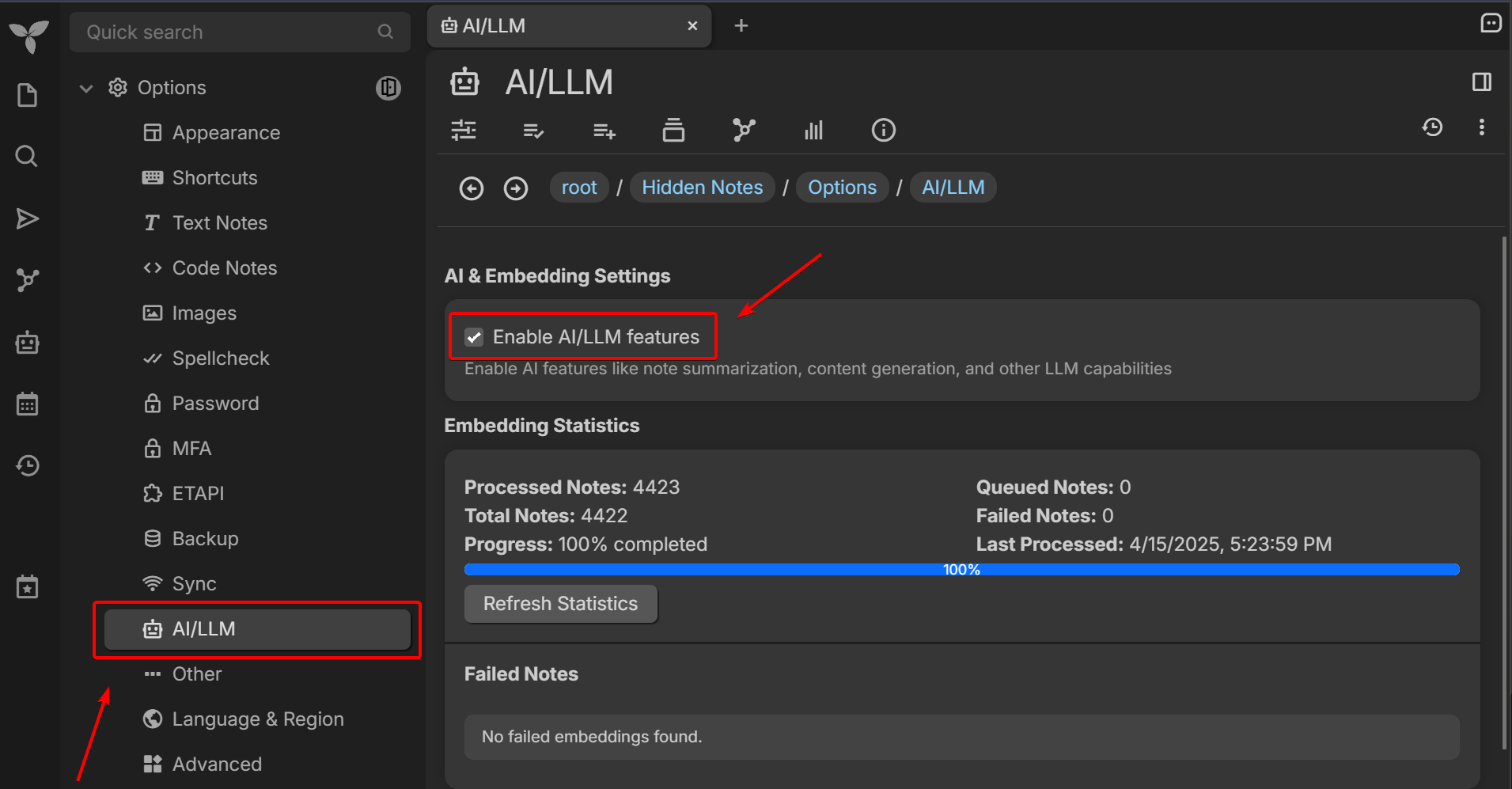

The quickest way to get started is to navigate to the “AI/LLM” settings:

Enable the feature:

Embeddings

Embeddings are important because they give us a compact AI “summary” of each of your Notes (a list of numbers, not human-readable text). We can then perform mathematical operations on these summaries, such as cosine similarity, to decide which Notes are most relevant to send to the LLM as context when you're chatting, among other useful functions.
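To make the idea concrete, here is a minimal Python sketch of ranking Notes by cosine similarity to a query embedding. The vectors are made-up three-dimensional toys (real embedding models produce hundreds or thousands of dimensions), and `top_k_notes` is a hypothetical helper for illustration, not Trilium's actual context-selection code.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)

def top_k_notes(query_vec: list[float], note_vecs: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k notes whose embeddings are closest to the query."""
    ranked = sorted(
        note_vecs,
        key=lambda note_id: cosine_similarity(query_vec, note_vecs[note_id]),
        reverse=True,
    )
    return ranked[:k]

# Toy embeddings keyed by note id
notes = {
    "groceries": [0.9, 0.1, 0.0],
    "recipes": [0.8, 0.3, 0.1],
    "taxes": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]  # embedding of something like "what should I buy for dinner?"
print(top_k_notes(query, notes, k=2))  # ['groceries', 'recipes']
```

Cosine similarity compares direction rather than length, which is why it is a common choice for embeddings: two notes about the same topic score close to 1 even if one is much longer than the other.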

You will then need to set up the AI “provider” that you wish to use to create the embeddings for your Notes. Currently OpenAI, Voyage AI, and Ollama are supported providers for embedding generation.

In the following example, we're going to use our self-hosted Ollama instance to create the embeddings for our Notes. You can see additional documentation about installing your own Ollama locally in Installing Ollama.

To see what embedding models Ollama has available, you can check out this search on their website, and then pull whichever one you want to try out. As of 4/15/25, my personal favorite is mxbai-embed-large.
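Once Ollama is running, you can pull the model from the command line with `ollama pull mxbai-embed-large`. The sketch below shows roughly what an embedding request to Ollama looks like, using only the Python standard library. It assumes Ollama's `/api/embed` endpoint, which accepts `model` and `input` and returns an `embeddings` list; older Ollama versions expose a different `/api/embeddings` endpoint, so check the Ollama API docs for your version. This is not necessarily how Trilium calls Ollama internally.

```python
import json
import urllib.request

def build_embed_request(text: str, model: str = "mxbai-embed-large",
                        base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build (but don't send) a POST to Ollama's /api/embed endpoint."""
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def embed(text: str, model: str = "mxbai-embed-large") -> list[float]:
    """Send the request and return the vector for our single input string."""
    with urllib.request.urlopen(build_embed_request(text, model)) as resp:
        payload = json.load(resp)
    # /api/embed returns {"embeddings": [[...], ...]}: one vector per input
    return payload["embeddings"][0]

# Inspecting the request works offline; calling embed() needs a running Ollama.
req = build_embed_request("Meeting notes from Monday")
print(req.get_method(), req.full_url)  # POST http://localhost:11434/api/embed
```

Calling `embed("some note text")` only works against a running Ollama instance with the model already pulled.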

First, we'll select the Ollama provider from the provider tabs, then enter the Base URL of our Ollama instance. Since our Ollama is running on our local machine, our Base URL is http://localhost:11434. We will then click the “refresh” button to have it fetch our models:
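The “refresh” button needs to ask Ollama which models are installed. Ollama exposes that list at `GET /api/tags` under the Base URL; the sketch below assumes that is the endpoint being queried, which we have not confirmed against Trilium's source. The sample response is trimmed down to the `name` field so the example runs offline.

```python
import json
import urllib.request

def tags_url(base_url: str) -> str:
    """Ollama lists locally installed models at GET {base_url}/api/tags."""
    return base_url.rstrip("/") + "/api/tags"

def parse_model_names(payload: dict) -> list[str]:
    """Pull the model names out of an /api/tags response body."""
    return [model["name"] for model in payload.get("models", [])]

def list_models(base_url: str = "http://localhost:11434") -> list[str]:
    """Fetch and parse the installed-model list (needs a running Ollama)."""
    with urllib.request.urlopen(tags_url(base_url)) as resp:
        return parse_model_names(json.load(resp))

# Offline example with a trimmed-down response; real entries carry more fields.
sample = {"models": [{"name": "mxbai-embed-large:latest"}, {"name": "llama3.2:latest"}]}
print(parse_model_names(sample))  # ['mxbai-embed-large:latest', 'llama3.2:latest']
```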

When you open the “Embedding Model” dropdown, embedding models should be at the top of the list, separated from regular chat models by a horizontal line, as seen below:

After you select an embedding model, embedding generation should begin automatically. You can confirm this by checking the embedding statistics at the top of the “AI/LLM” settings panel:

If you don't see any embeddings being created, scroll to the bottom of the settings and click “Recreate All Embeddings”:

Creating the embeddings will take some time. After that, they are kept up to date automatically whenever a Note is created, updated, or deleted.
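The text above doesn't say how regeneration is triggered. As a minimal sketch, assuming a hypothetical in-memory `EmbeddingIndex` with save and delete hooks, keeping embeddings in sync could look like the following. The content hash used to skip saves that didn't change anything is our own addition for illustration, not a documented Trilium behavior.

```python
import hashlib
from typing import Callable

class EmbeddingIndex:
    """Hypothetical in-memory store holding one embedding per note."""

    def __init__(self, embed: Callable[[str], list[float]]):
        self.embed = embed                        # e.g. a call to your embedding provider
        self.vectors: dict[str, list[float]] = {}
        self.hashes: dict[str, str] = {}          # content hash per note, to skip no-op saves

    def on_note_saved(self, note_id: str, content: str) -> bool:
        """Call on create or update; returns True if the note was (re-)embedded."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if self.hashes.get(note_id) == digest:
            return False                          # content unchanged, keep the old vector
        self.vectors[note_id] = self.embed(content)
        self.hashes[note_id] = digest
        return True

    def on_note_deleted(self, note_id: str) -> None:
        """Call on delete so the note can no longer be picked as context."""
        self.vectors.pop(note_id, None)
        self.hashes.pop(note_id, None)

# Toy embedder (text length as a 1-D "vector") so the example runs offline.
index = EmbeddingIndex(lambda text: [float(len(text))])
print(index.on_note_saved("n1", "hello"))        # True  (new note)
print(index.on_note_saved("n1", "hello"))        # False (unchanged)
print(index.on_note_saved("n1", "hello world"))  # True  (updated)
index.on_note_deleted("n1")
print("n1" in index.vectors)                     # False
```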

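If you change your embedding provider or the model used, you'll need to recreate all embeddings: vectors produced by different models are not comparable with each other, and often don't even have the same number of dimensions.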
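One way to make the “recreate everything” rule visible is to record which provider and model produced each vector. The `StoredEmbedding` record and `stale_note_ids` helper below are hypothetical illustrations, not Trilium's storage format. The dimensions in the comments (1024 for mxbai-embed-large, 1536 for OpenAI's text-embedding-3-small) show why vectors from different models can't simply be mixed.

```python
from dataclasses import dataclass

@dataclass
class StoredEmbedding:
    provider: str        # e.g. "ollama"
    model: str           # e.g. "mxbai-embed-large"
    vector: list[float]

def stale_note_ids(store: dict[str, StoredEmbedding], provider: str, model: str) -> list[str]:
    """Return ids of notes embedded with a different provider/model than the active one."""
    return sorted(
        note_id for note_id, emb in store.items()
        if (emb.provider, emb.model) != (provider, model)
    )

store = {
    "n1": StoredEmbedding("ollama", "mxbai-embed-large", [0.0] * 1024),       # 1024 dimensions
    "n2": StoredEmbedding("openai", "text-embedding-3-small", [0.0] * 1536),  # 1536 dimensions
}
# With mxbai-embed-large as the active model, n2 must be recreated.
print(stale_note_ids(store, "ollama", "mxbai-embed-large"))  # ['n2']
```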

Tools

Tools are essentially functions that we describe to the various LLM providers. An LLM can then respond in a specific format that tells us which tool function it would like to invoke, and with which parameters. We then execute that tool and provide its result as additional context in the Chat conversation.
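The round trip described above can be sketched as a loop: send the conversation, run any tool the model asks for, append the result, and repeat until the model replies with plain text. This minimal sketch assumes OpenAI-style messages (each tool call carries a `function.name` and a JSON-encoded `function.arguments` string) and uses a scripted `fake_llm` in place of a real provider so it runs offline. The `read_note` stand-in and its `note_id` parameter are hypothetical.

```python
import json
from typing import Callable

Message = dict
LLM = Callable[[list[Message]], Message]

def chat_with_tools(llm: LLM, tools: dict[str, Callable[..., object]],
                    messages: list[Message], max_rounds: int = 5) -> str:
    """Call the model until it answers without requesting a tool (or max_rounds is hit)."""
    for _ in range(max_rounds):
        reply = llm(messages)
        messages.append(reply)
        calls = reply.get("tool_calls") or []
        if not calls:
            return reply["content"]  # final answer for the user
        for call in calls:
            fn = call["function"]
            result = tools[fn["name"]](**json.loads(fn["arguments"]))
            # The tool result goes back into the conversation as extra context
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    raise RuntimeError("model kept requesting tools; giving up")

# Scripted stand-in for a real LLM: first asks for a tool, then answers from its result.
def fake_llm(messages: list[Message]) -> Message:
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "content": None, "tool_calls": [
            {"id": "c1", "function": {"name": "read_note", "arguments": '{"note_id": "abc"}'}}]}
    note = json.loads(messages[-1]["content"])
    return {"role": "assistant", "content": f"Your note says: {note}"}

tools = {"read_note": lambda note_id: f"contents of {note_id}"}
print(chat_with_tools(fake_llm, tools, [{"role": "user", "content": "What is in note abc?"}]))
# Your note says: contents of abc
```

The `max_rounds` cap is a design choice worth keeping in any real loop: without it, a model that keeps requesting tools would never return an answer.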

These are the tools that currently exist. They will certainly be made more effective over time, and more will be added:

search_notes - Semantic search

keyword_search - Keyword-based search

attribute_search - Attribute-specific search

search_suggestion - Search syntax helper

read_note - Read note content (helps the LLM read Notes)

create_note - Create a Note

update_note - Update a Note

manage_attributes - Manage attributes on a Note

manage_relationships - Manage the various relationships between Notes

extract_content - Used to smartly extract content from a Note

calendar_integration - Used to find date notes, create date notes, get the daily note, etc.
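For the LLM to call these tools, each one has to be described to the provider along with its parameters. The sketch below builds a declaration in the JSON Schema based “function tool” format used by OpenAI-style APIs; other providers, such as Anthropic, use a similar but differently shaped structure. The `keyword_search` parameters shown (`query`, `maxResults`) are hypothetical, not Trilium's actual tool definition.

```python
import json

def function_tool(name: str, description: str, properties: dict, required: list[str]) -> dict:
    """Build an OpenAI-style function tool declaration with a JSON Schema for its arguments."""
    missing = set(required) - set(properties)
    if missing:
        raise ValueError(f"required parameters not declared: {sorted(missing)}")
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }

# Hypothetical parameters: the real keyword_search tool may accept different ones.
keyword_search = function_tool(
    "keyword_search",
    "Keyword-based search over the user's notes",
    {
        "query": {"type": "string", "description": "Words to look for"},
        "maxResults": {"type": "integer", "description": "Upper bound on returned notes"},
    },
    required=["query"],
)
print(json.dumps(keyword_search, indent=2))
```

The `description` strings matter more than they look: they are what the model reads when deciding which tool fits a request.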

When Tools are executed within your Chat, you'll see output like the following:

You don't need to tell the LLM to use a particular tool; it should decide on its own when to call tools, and they are executed automatically as needed.

Overview

Now that you know about embeddings and tools, you can use the “Chat with Notes” button to start chatting:

If you don't see the “Chat with Notes” button on your side launchbar, you might need to move it from the “Available Launchers” section to the “Visible Launchers” section: